Willi Menapace

I am a Senior Research Scientist at Snap Inc. (Creative Vision team) in Santa Monica. I focus on large-scale video generation systems for interactive applications, and I lead the creation of the Snap Video line of foundational generators.

Previously, I earned my PhD from the University of Trento, with research experience at the Max Planck Institute for Informatics and Snap Inc. I was advised by Elisa Ricci and Sergey Tulyakov, and collaborated with researchers including Nicu Sebe, Christian Theobalt, Aliaksandr Siarohin, and Vladislav Golyanik.

GitHub LinkedIn Google Scholar

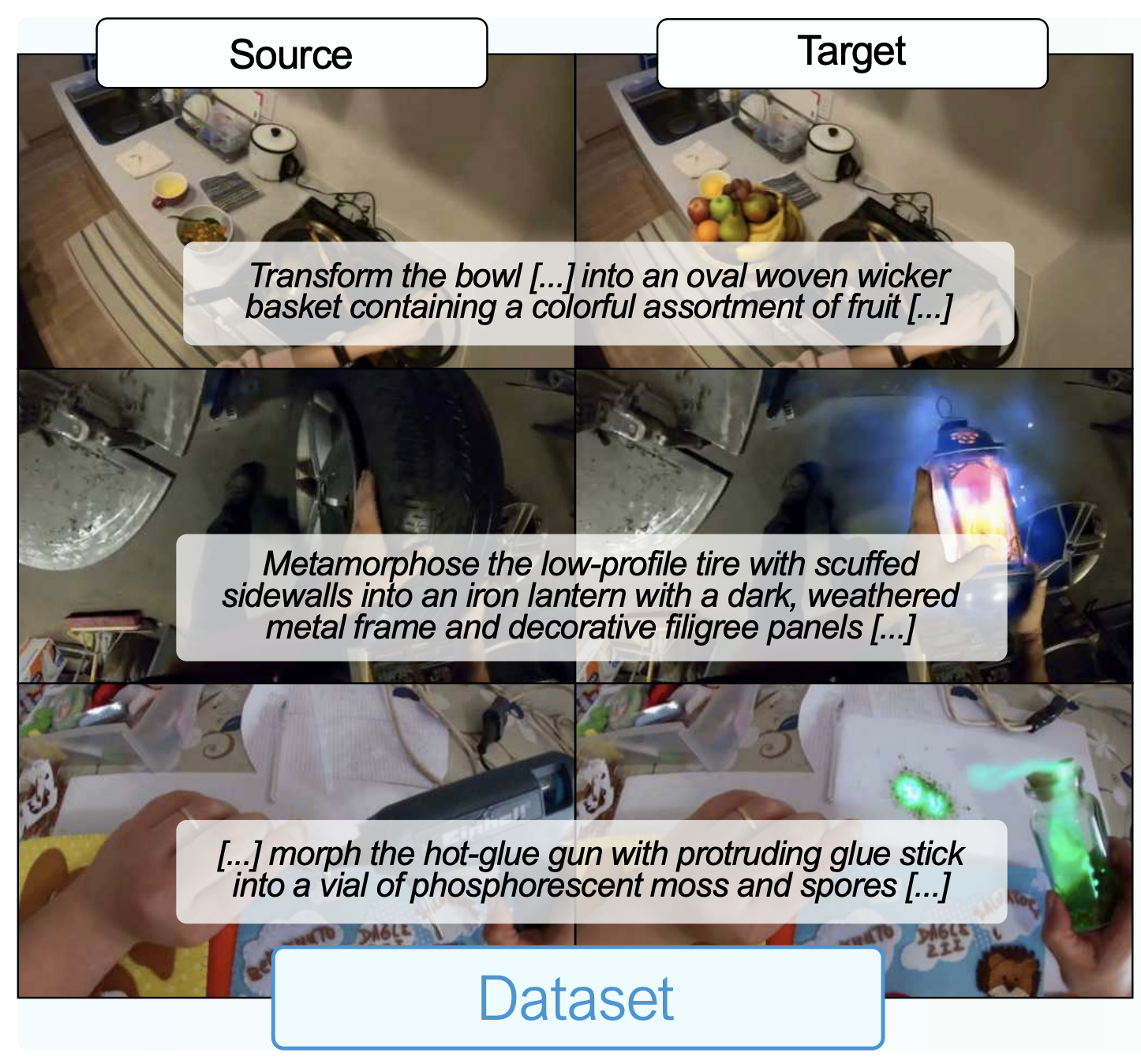

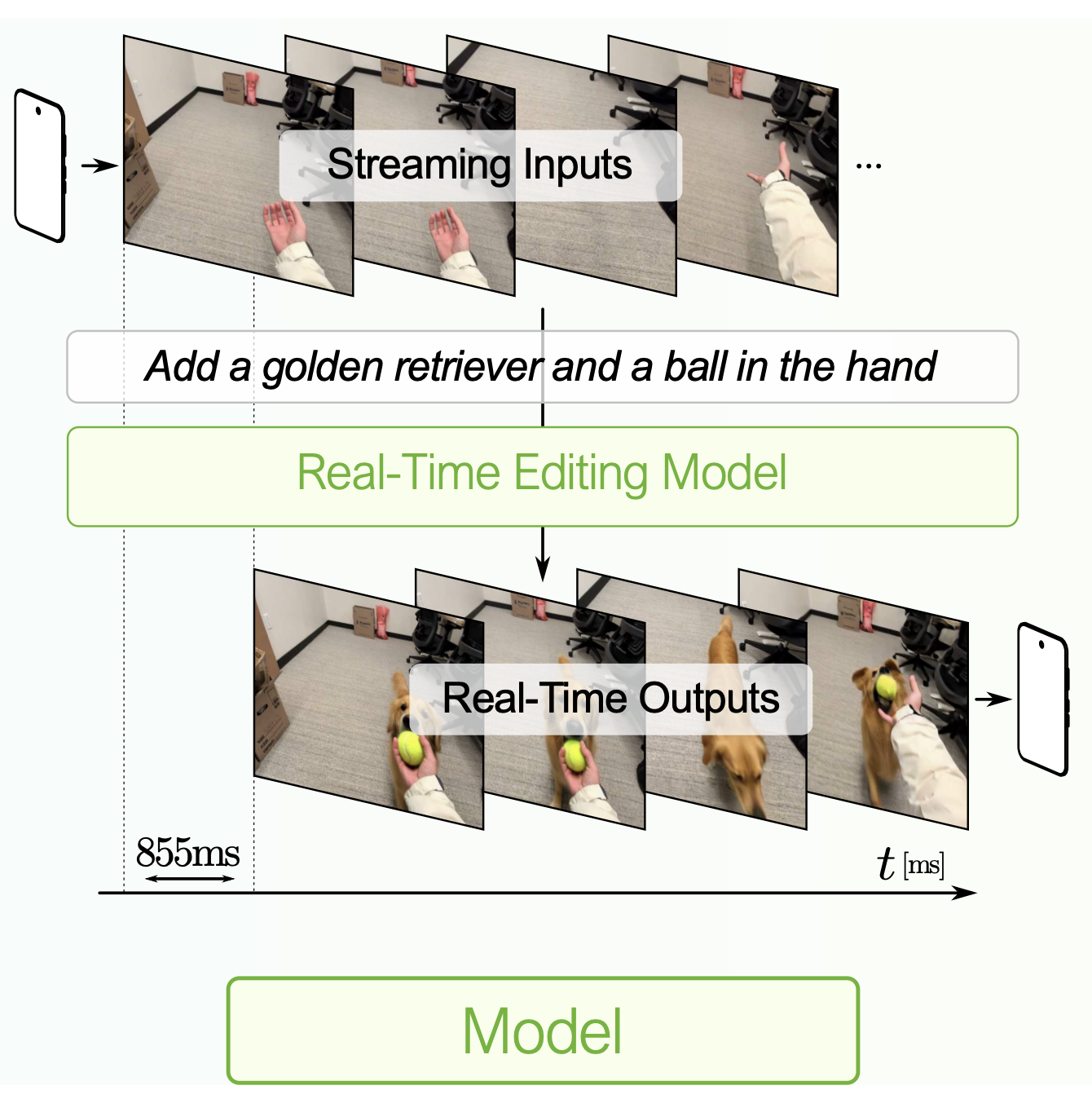

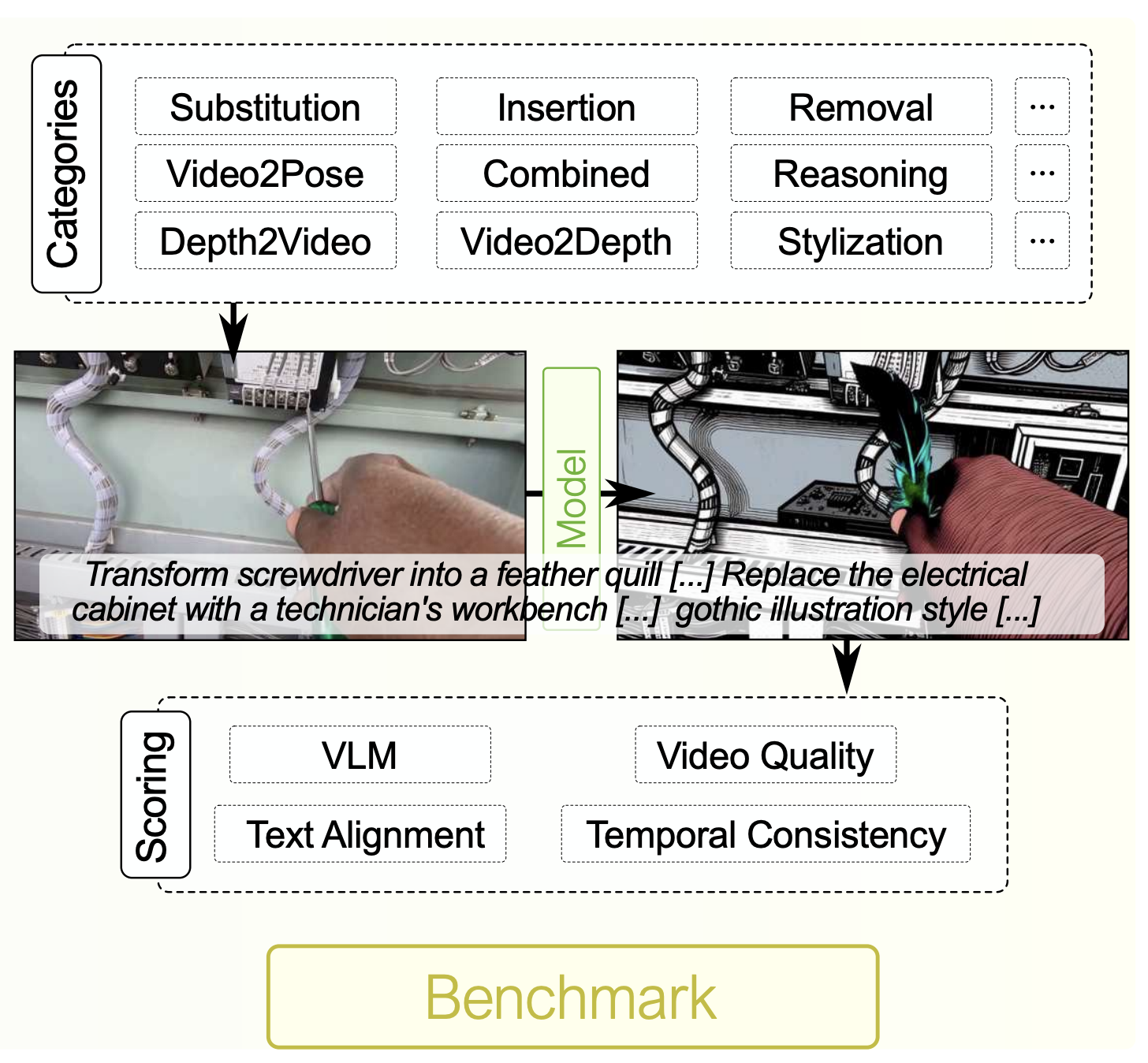

EgoEdit: Dataset, Real-Time Streaming Model, and Benchmark for Egocentric Video Editing

Runjia Li, Moayed Haji-Ali, Ashkan Mirzaei, Chaoyang Wang, Arpit Sahni, Ivan Skorokhodov, Aliaksandr Siarohin, Tomas Jakab, Junlin Han, Sergey Tulyakov, Philip Torr, Willi Menapace

We study instruction-guided editing of egocentric videos for interactive AR applications. While recent AI video editors perform well on third-person footage, egocentric views present unique challenges - including rapid egomotion and frequent hand-object interactions - that create a significant domain gap. Moreover, existing offline editing pipelines suffer from high latency, limiting real-time interaction. To address these issues, we present a complete ecosystem for egocentric video editing. ...

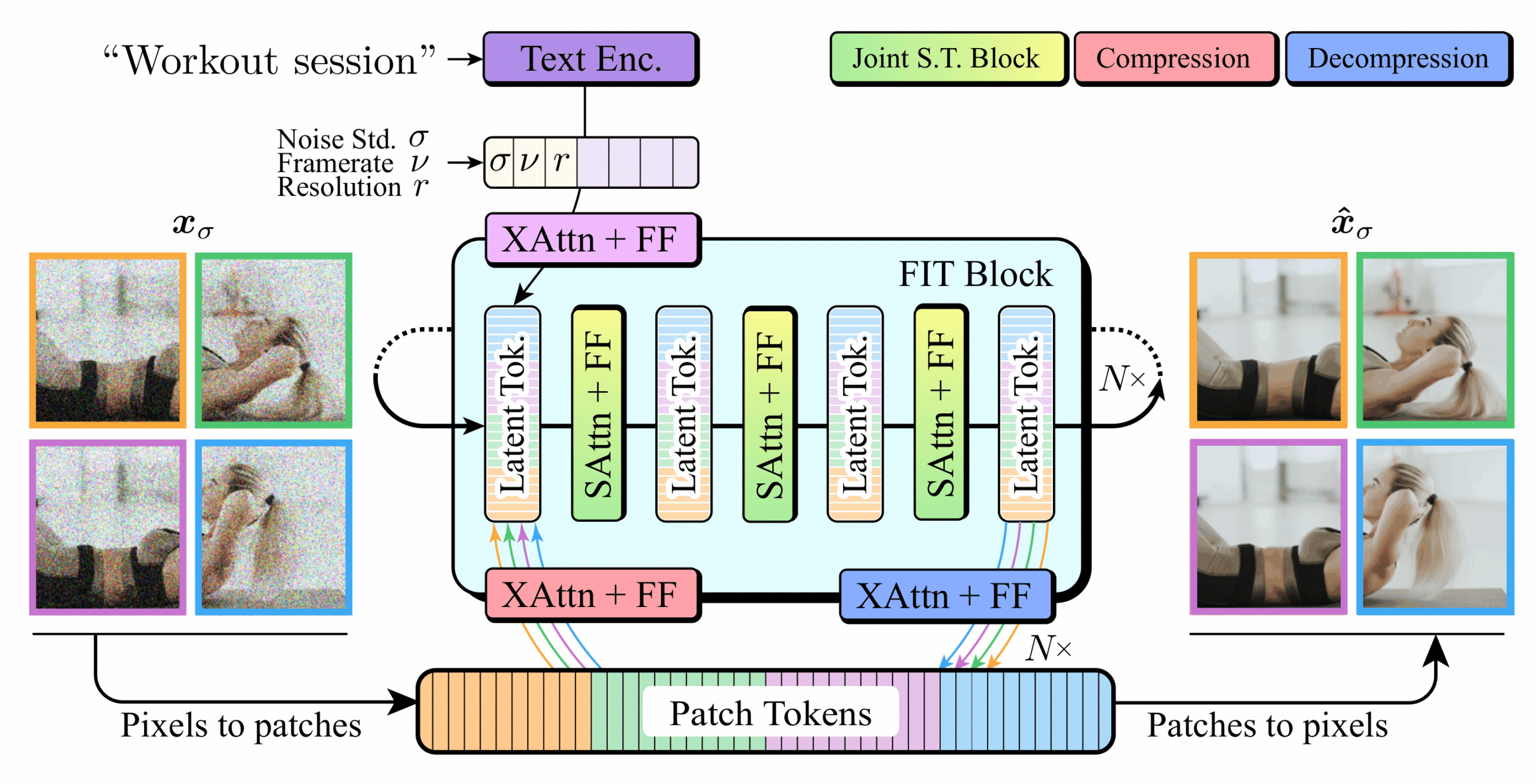

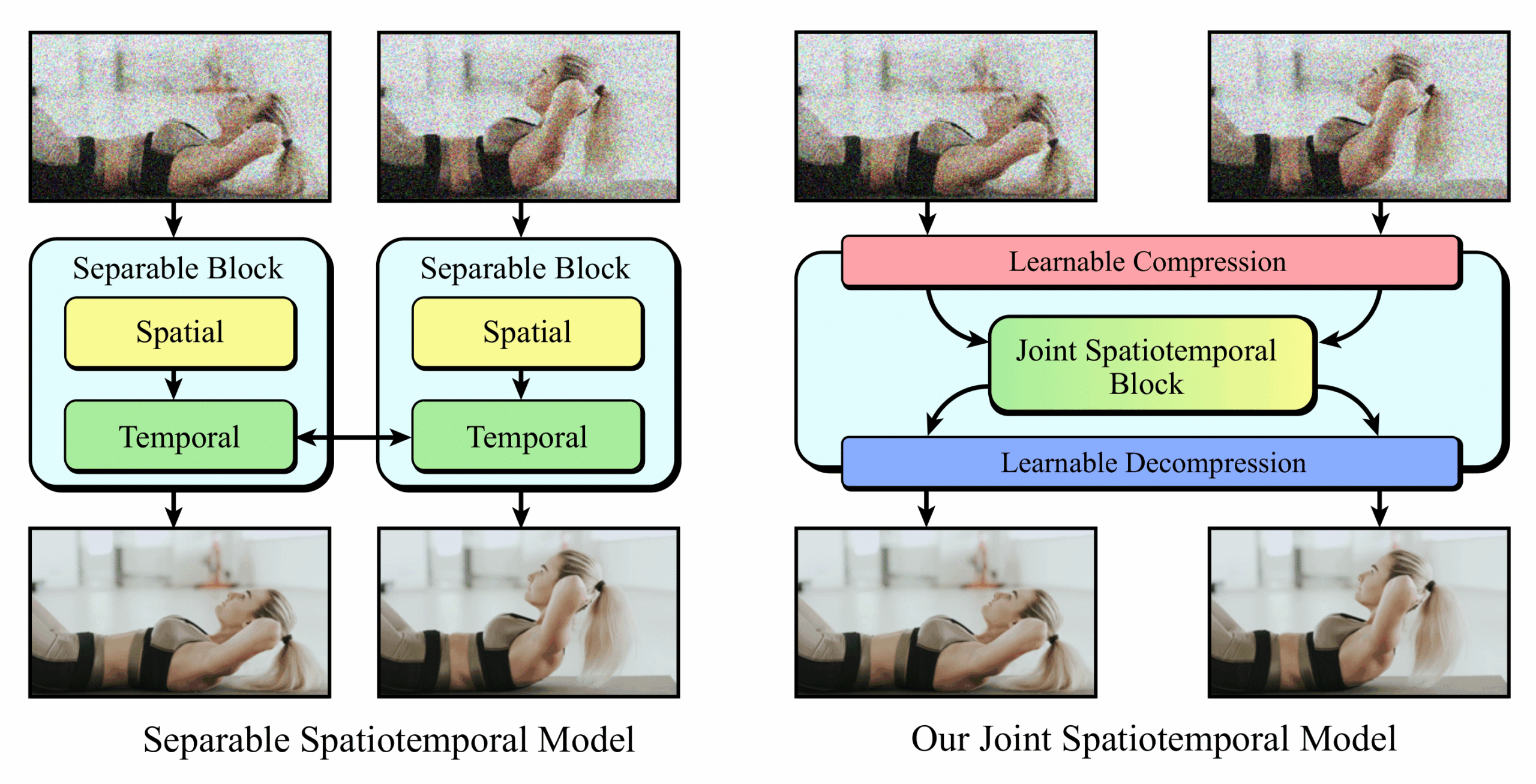

Snap Video: Scaled Spatiotemporal Transformers for Text-to-Video Synthesis

Willi Menapace, Aliaksandr Siarohin, Ivan Skorokhodov, Ekaterina Deyneka, Tsai-Shien Chen, Anil Kag, Yuwei Fang, Aleksei Stoliar, Elisa Ricci, Jian Ren, Sergey Tulyakov

CVPR 2024 (Highlight)

Contemporary models for generating images show remarkable quality and versatility. Swayed by these advantages, the research community repurposes them to generate videos. Since video content is highly redundant, we argue that naively bringing advances of image models to the video generation domain reduces motion fidelity, visual quality and impairs scalability. In this work, we build Snap Video, a video-first model that systematically addresses these challenges. ...

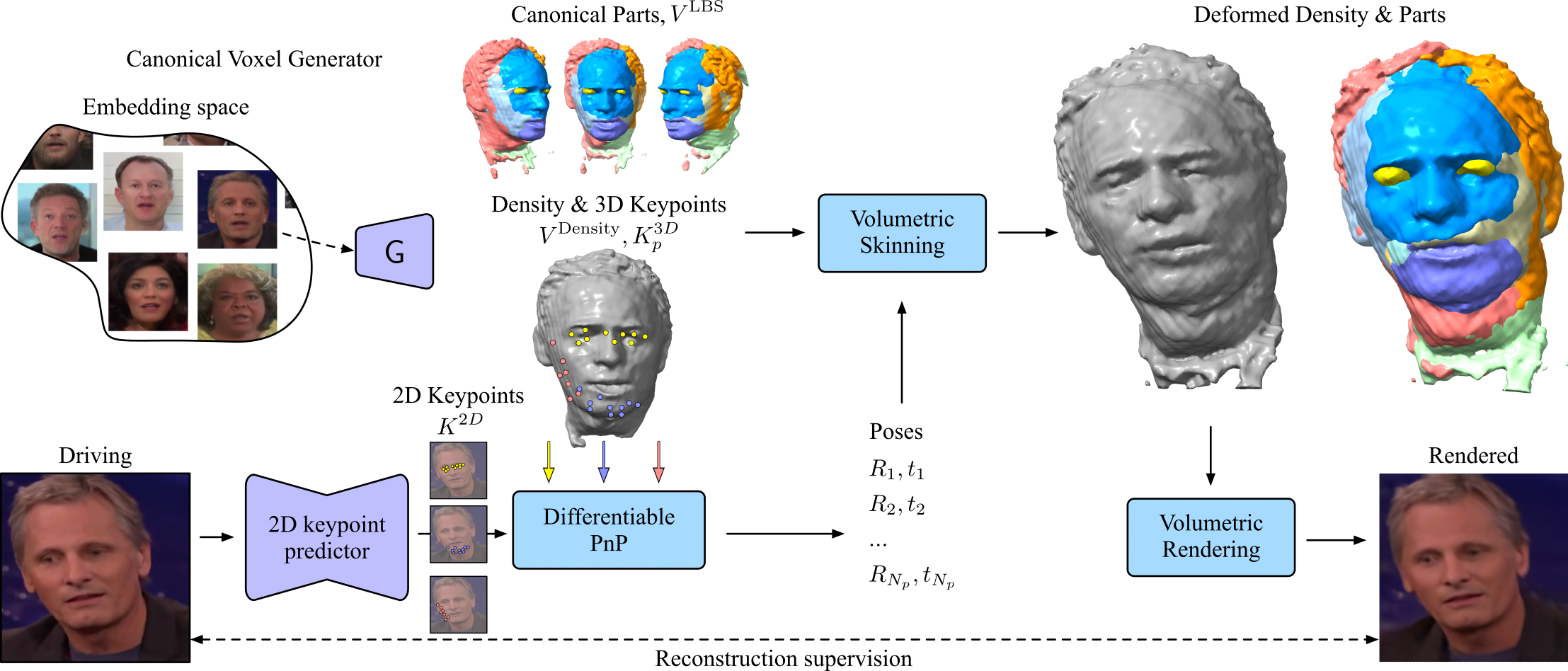

Unsupervised Volumetric Animation

Aliaksandr Siarohin, Willi Menapace, Ivan Skorokhodov, Kyle Olszewski, Hsin-Ying Lee, Jian Ren, Menglei Chai, Sergey Tulyakov

CVPR 2023

We propose a novel approach for unsupervised 3D animation of non-rigid deformable objects. Our method learns the 3D structure and dynamics of objects solely from single-view RGB videos, and can decompose them into semantically meaningful parts that can be tracked and animated. Using a 3D autodecoder framework, paired with a keypoint estimator via a differentiable PnP algorithm, our model learns the underlying object geometry and parts decomposition in an entirely unsupervised manner. This allows it to perform 3D segmentation, 3D keypoint estimation, novel view synthesis, and animation. ...

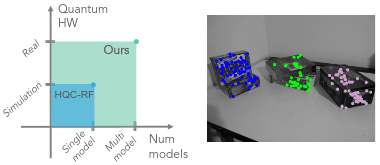

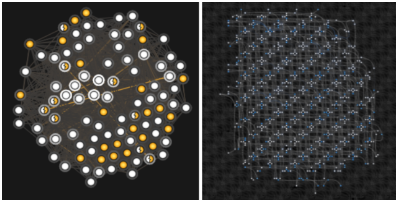

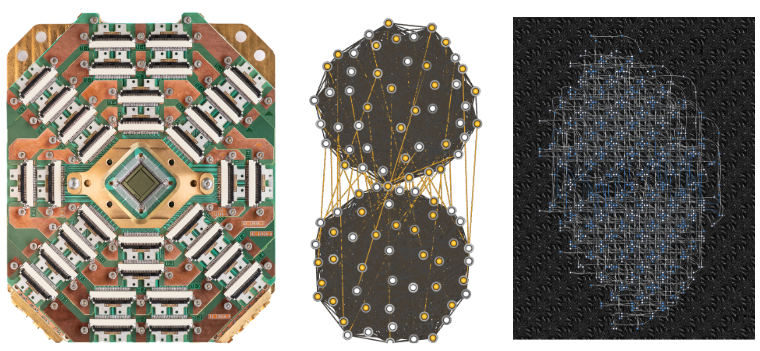

Quantum Multi-Model Fitting

Matteo Farina, Luca Magri, Willi Menapace, Elisa Ricci, Vladislav Golyanik, Federica Arrigoni

CVPR 2023 (Highlight)

Geometric model fitting is a challenging but fundamental computer vision problem. Recently, quantum optimization has been shown to enhance robust fitting for the case of a single model, while leaving the question of multi-model fitting open. In response to this challenge, this paper shows that the latter case can significantly benefit from quantum hardware and proposes the first quantum approach to multi-model fitting (MMF). We formulate MMF as a problem that can be efficiently sampled by modern adiabatic quantum computers without the relaxation of the objective function. We also propose an iterative and decomposed version of our method, which supports real-world-sized problems. The experimental evaluation demonstrates promising results on a variety of datasets.

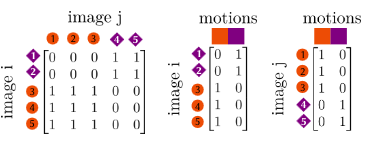

Quantum Motion Segmentation

Federica Arrigoni, Willi Menapace, Marcel Seelbach Benkner, Elisa Ricci, Vladislav Golyanik

ECCV 2022

Motion segmentation is a challenging problem that seeks to identify independent motions in two or several input images. This paper introduces the first algorithm for motion segmentation that relies on adiabatic quantum optimization of the objective function. The proposed method achieves on-par performance with the state of the art on problem instances which can be mapped to modern quantum annealers.

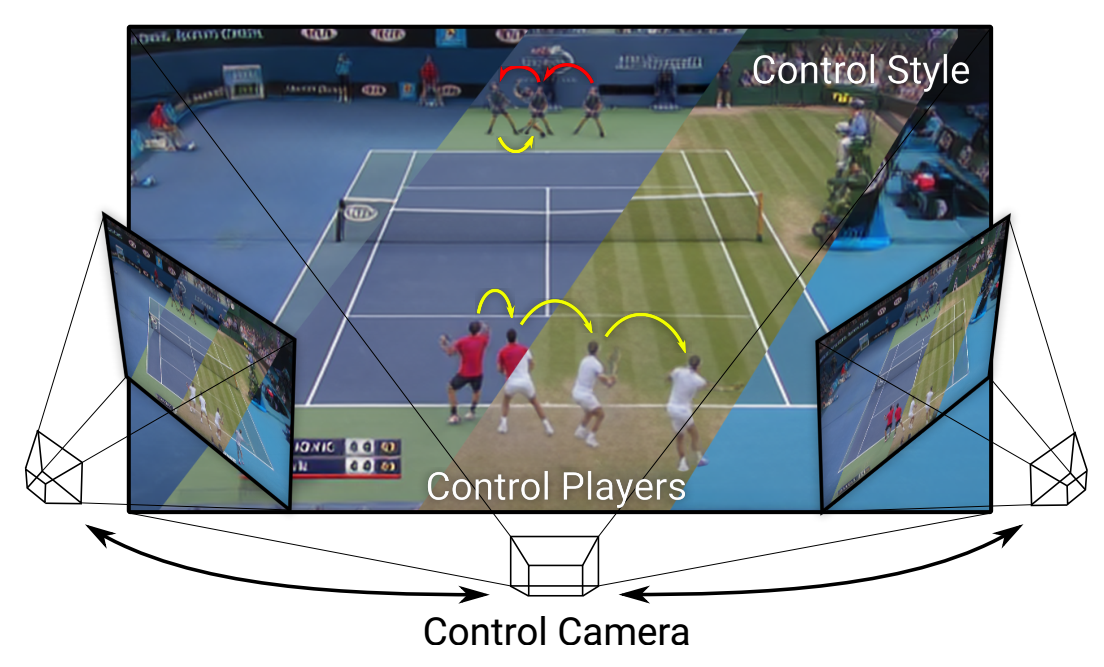

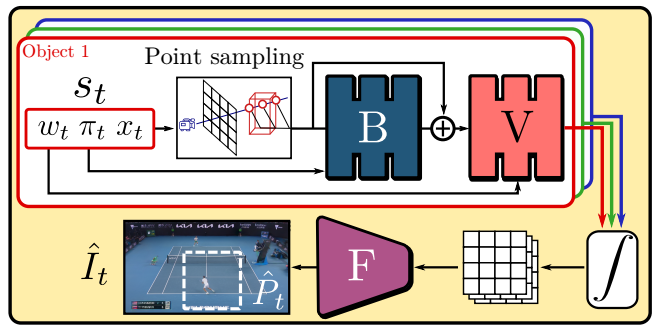

Playable Environments: Video Manipulation in Space and Time

Willi Menapace, Stéphane Lathuilière, Aliaksandr Siarohin, Christian Theobalt, Sergey Tulyakov, Vladislav Golyanik, Elisa Ricci

CVPR 2022

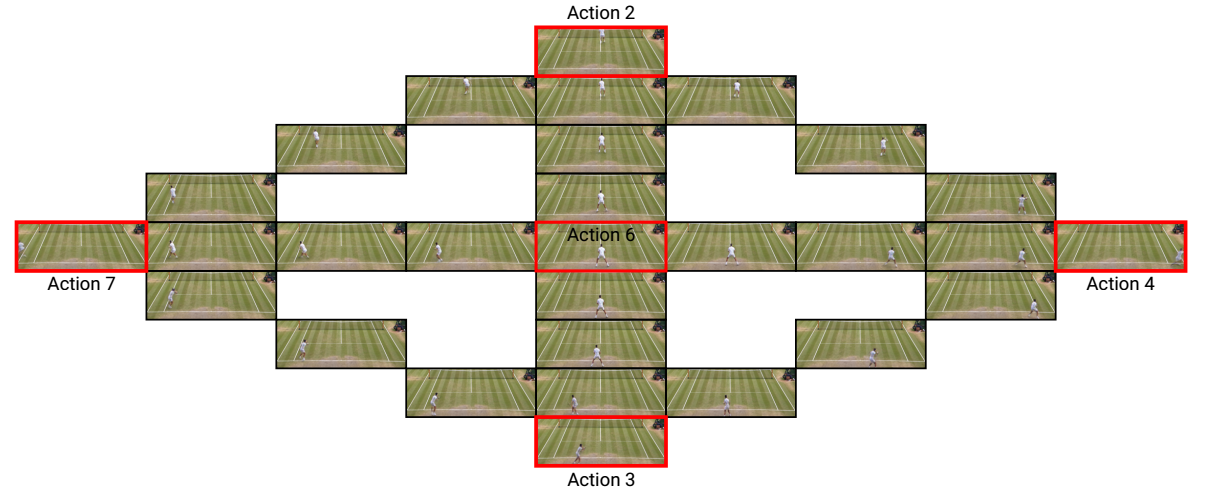

We present Playable Environments - a new representation for interactive video generation and manipulation in space and time. With a single image at inference time, our novel framework allows the user to move objects in 3D while generating a video by providing a sequence of desired actions. The actions are learnt in an unsupervised manner. The camera can be controlled to get the desired viewpoint. Our method builds an environment state for each frame, which can be manipulated by our proposed action module and decoded back to the image space with volumetric rendering. To support diverse appearances of objects, we extend neural radiance fields ...

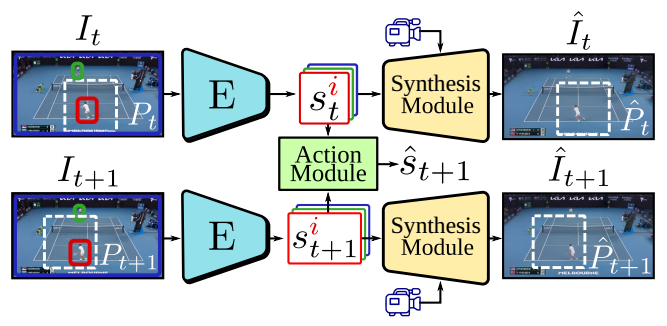

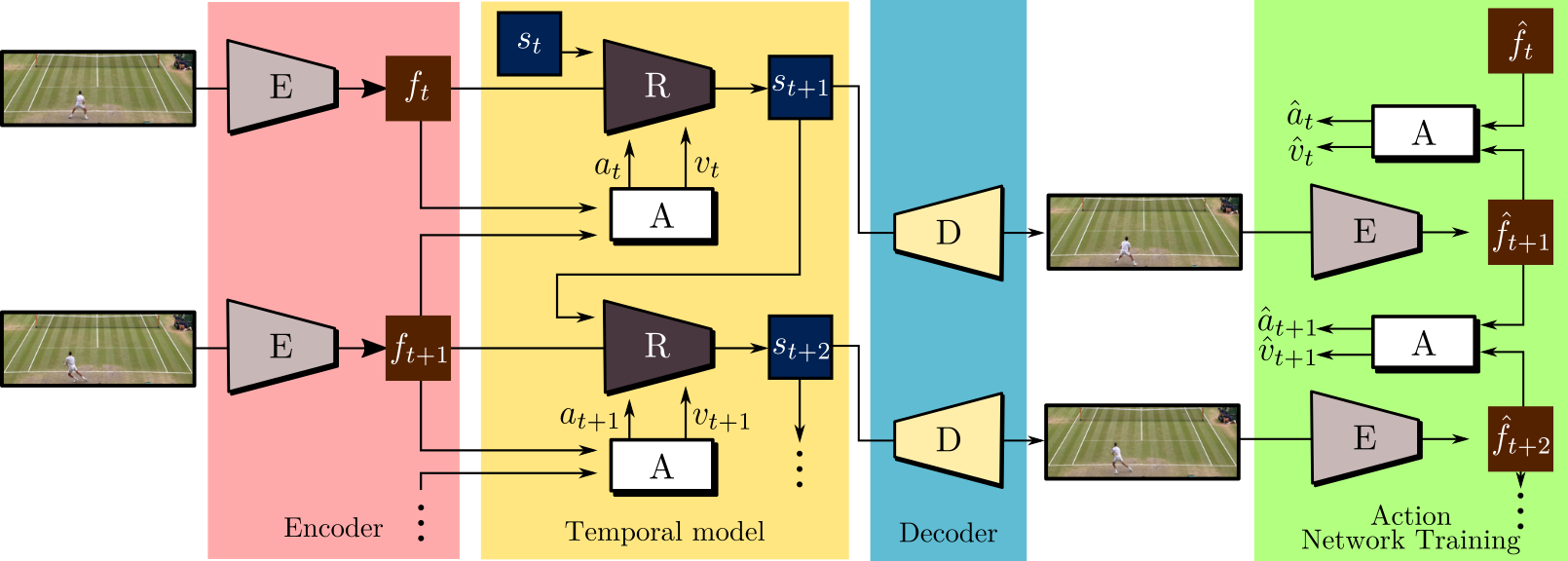

Playable Video Generation

Willi Menapace, Stéphane Lathuilière, Sergey Tulyakov, Aliaksandr Siarohin, Elisa Ricci

CVPR 2021 (Oral)

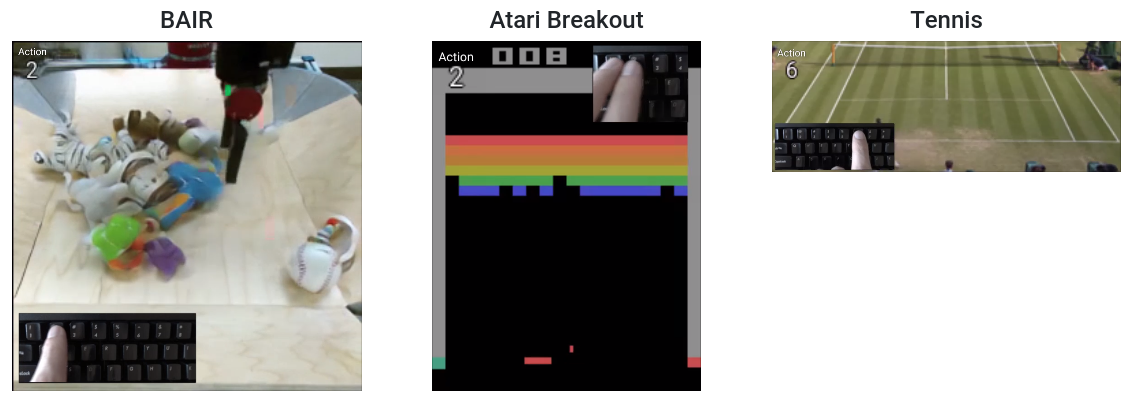

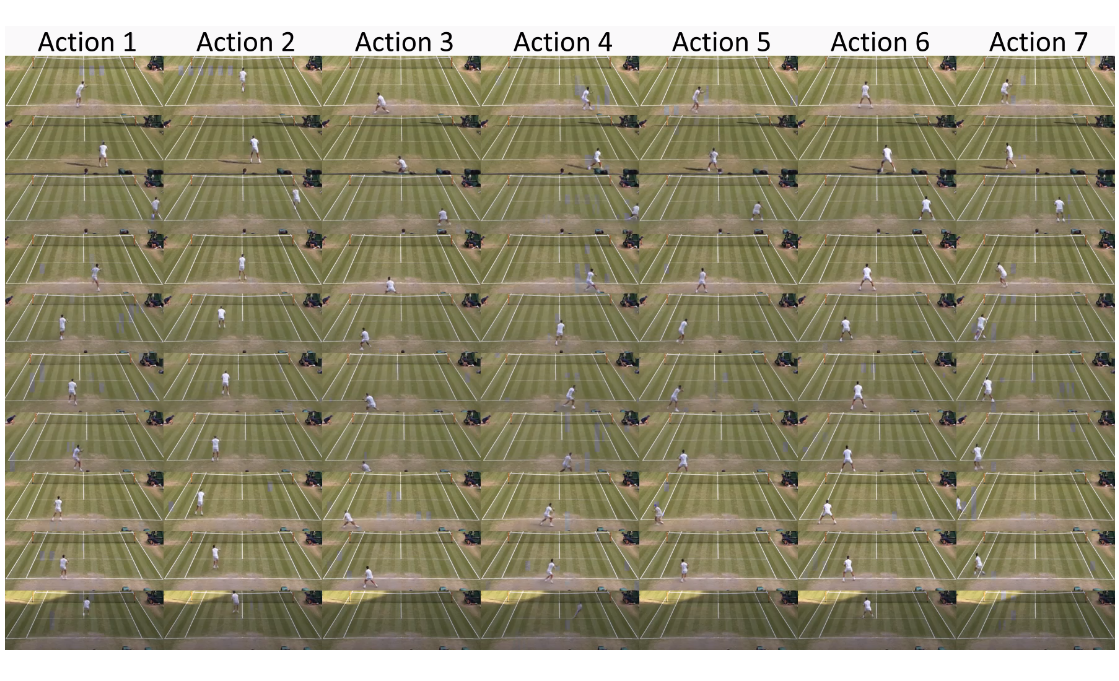

This paper introduces the unsupervised learning problem of playable video generation (PVG). In PVG, we aim at allowing a user to control the generated video by selecting a discrete action at every time step as when playing a video game. The difficulty of the task lies both in learning semantically consistent actions and in generating realistic videos conditioned on the user input. We propose a novel framework for PVG that is trained in a self-supervised manner on a large dataset of unlabelled videos. We employ an encoder-decoder architecture where the predicted action labels act as ...

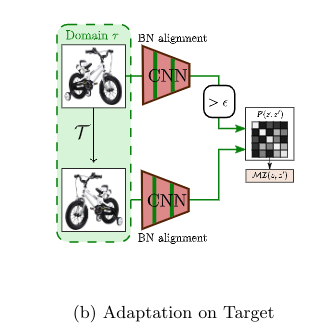

Learning to Cluster under Domain Shift

Willi Menapace, Stéphane Lathuilière, Elisa Ricci

ECCV 2020

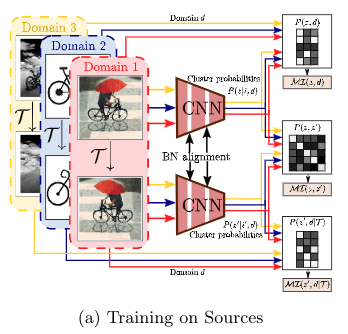

While unsupervised domain adaptation methods based on deep architectures have achieved remarkable success in many computer vision tasks, they rely on a strong assumption, i.e. labeled source data must be available. In this work we overcome this assumption and we address the problem of transferring knowledge from a source to a target domain when both source and target data have no annotations. Inspired by recent works on deep clustering, our approach leverages information from data gathered from multiple source domains to build a domain-agnostic clustering model which is then refined at inference time when target data become available. ...

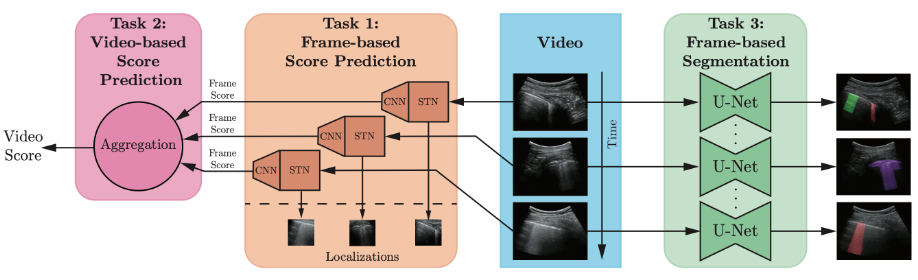

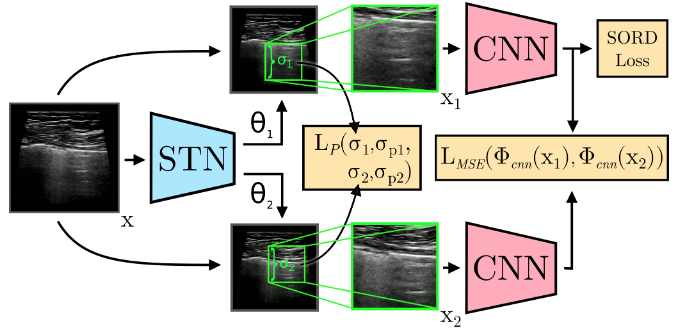

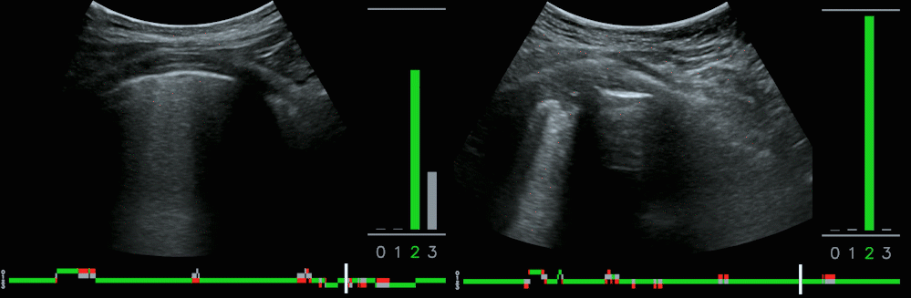

Deep Learning for Classification and Localization of COVID-19 Markers in Point-of-Care Lung Ultrasound

Subhankar Roy*, Willi Menapace*, Sebastiaan Oei, et al.

IEEE Transactions on Medical Imaging

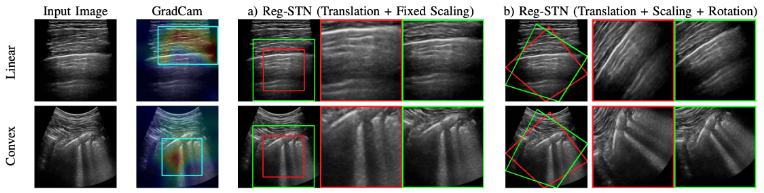

Deep learning (DL) has proved successful in medical imaging and, in the wake of the recent COVID-19 pandemic, some works have started to investigate DL-based solutions for the assisted diagnosis of lung diseases. While existing works focus on CT scans, this paper studies the application of DL techniques for the analysis of lung ultrasonography (LUS) images. Specifically, we present a novel fully-annotated dataset of LUS images collected from several Italian hospitals, with labels indicating the degree of disease severity at a frame-level, video-level, and pixel-level (segmentation masks). ...

- 2024 - Present: Research Scientist at Snap Inc.

- Spring - Winter 2023: Research Intern at Snap Inc. supervised by Sergey Tulyakov, Aliaksandr Siarohin

- Summer 2022: Research Intern at Snap Inc. supervised by Sergey Tulyakov, Aliaksandr Siarohin

- Spring 2021 - Spring 2022: Research Intern at Max Planck Institute for Informatics supervised by Christian Theobalt, Vladislav Golyanik

- Summer 2019: Intern, Deep Learning at eXact lab

- Spring 2019: External Collaborator, Deep Learning at eXact lab

- Summer 2017: Intern, CUDA/OpenCL Developer at eXact lab

- Summer 2014: Intern, Data Analyst/C# Programmer at Famas System

- Summer 2013: Intern, Software Engineering at FBK